Hallucination-Proof AI Training Platform (Feb 2026)

Dec 8, 2025

Healthcare and life sciences teams share a difficult challenge: AI tools that speak with confidence but pull details from thin air. When training covers drug protocols, device instructions, or surgical steps, every line must tie back to an approved source. New AI accuracy systems now check each statement against your own documents before anything reaches learners, cutting out guesswork and unsupported claims. The stakes are high (FDA scrutiny, audit failures, and in the most serious situations, patient risk), so choosing an approach that keeps information anchored to verified materials is no longer optional.

TLDR:

Hallucination-proof AI prevents fabricated training content by grounding every claim in your verified source documents

A leading enterprise-grade system traces each training statement back to specific pages in your clinical protocols and SOPs

Real-time analytics identify knowledge gaps per learner and question to catch compliance risks before audits

A top medical-ready tool converts 5,000+ pages into courses with high accuracy and strong completion rates across large enrollments

Multi-channel delivery reaches clinicians and staff through MS Teams, SMS, or Slack without extra steps

What Are AI Hallucinations and Why They Matter in Controlled Industries

AI hallucinations occur when an LLM generates content that sounds credible but isn't based on verified sources. The model invents facts, fills gaps with plausible information, or misinterprets data.

In healthcare, pharmaceuticals, and life sciences, this creates serious liability. Training materials must reflect current protocols, clinical guidelines, and compliance requirements exactly. A single error in medical training can cause patient harm, regulatory violations, or failed audits.

Stanford researchers found that even retrieval-augmented models like GPT-4 with internet access made unsupported clinical assertions in nearly one-third of cases when tested on medical queries.

Why Controlled Industries Require Hallucination-Proof Training

Regulatory bodies like the FDA require training materials to be traceable, verifiable, and aligned with current clinical guidelines. Audit teams review that documentation trail during inspections.

General-purpose AI tools generate content probabilistically, with no guaranteed link back to an approved source, which makes their output hard to defend against compliance standards. When training covers drug interactions, surgical protocols, or diagnostic criteria, regulators don't accept "the AI said so" as documentation.

The financial stakes are real. FDA warning letters can halt product launches. Failed audits trigger corrective action plans that cost millions in delayed timelines. In extreme cases, inaccurate training contributes to adverse events that result in legal liability.

Patient safety depends on clinicians and life sciences teams having access to accurate information. Training content that contains factual errors can lead to medication mistakes or protocol deviations.

What Makes Training AI Hallucination-Proof?

Three mechanisms help prevent AI from fabricating information: source grounding (often implemented through retrieval-augmented generation), verification loops such as human-in-the-loop review, and referencing systems.

Source grounding steers the AI to generate content from the documents you provide, prioritizing those sources over its general training data. The system retrieves information primarily from your uploaded files, SOPs, or approved clinical guidelines and uses its general training mainly for structure, phrasing, and synthesis.
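
As a rough illustration, source grounding is often set up by passing the model only approved passages and instructing it to cite them or abstain. The sketch below assumes a generic chat-style LLM; the `Passage` type, the `[S1]` tag convention, and the refusal string are hypothetical, not Arist's actual prompt. Prompt instructions steer a model but cannot force it to comply, which is why grounding is paired with verification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    """A hypothetical chunk of an approved source document."""
    source_id: str  # illustrative label, e.g. "SOP-12 v3 p.4"
    text: str


def build_grounded_prompt(question: str, passages: list[Passage]) -> str:
    """Assemble a prompt that confines the model to the supplied passages."""
    if not passages:
        # Without sources there is nothing to ground on, so refuse early.
        raise ValueError("refusing to build a prompt without source passages")
    # Number each passage so the model can cite it as [S1], [S2], ...
    context = "\n".join(
        f"[S{i}] ({p.source_id}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    rules = (
        "Answer using ONLY the sources below. "
        "Cite every sentence with its source tag, e.g. [S1]. "
        "If the sources do not contain the answer, reply exactly: "
        "NOT FOUND IN APPROVED SOURCES."
    )
    return f"{rules}\n\nSources:\n{context}\n\nQuestion: {question}"
```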

Retrieval-augmented generation adds a search layer: the AI first locates relevant passages in your verified sources, then generates responses anchored to those passages. This makes the model far less likely to invent information when gaps exist.
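
A minimal retrieval step might look like the following. It ranks passages by the share of query terms they contain, a deliberately simple stand-in for the BM25 or embedding search a production system would more likely use. The function names, `k`, and `min_score` are illustrative assumptions.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real text analyzer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(
    query: str, passages: dict[str, str], k: int = 3, min_score: float = 0.2
) -> list[tuple[str, float]]:
    """Rank passages by the fraction of distinct query terms they contain."""
    q_terms = set(tokenize(query))
    if not q_terms:
        return []
    scored = []
    for pid, text in passages.items():
        overlap = len(q_terms & set(tokenize(text)))
        score = overlap / len(q_terms)
        if score >= min_score:  # drop weak matches instead of padding context
            scored.append((pid, score))
    # Highest score first; ties broken by passage id for stable output.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:k]
```

Passages below `min_score` are dropped, so a question with no matching source returns an empty list rather than weak context.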

Human-in-the-loop validation requires subject matter experts to review outputs before deployment. Automated systems flag any content lacking clear source citations for manual review.
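
The automated flagging step can be sketched as a simple router, assuming statements carry citation tags like `[S1]` (a hypothetical convention). It checks only that each statement cites a known source, not that the source actually supports it; that second check is a separate step.

```python
import re

# Hypothetical citation tag format: [S1], [S2], ...
CITATION = re.compile(r"\[(S\d+)\]")


def route_for_review(
    statements: list[str], known_sources: set[str]
) -> tuple[list[str], list[str]]:
    """Split statements into (auto_cleared, needs_sme_review).

    A statement is cleared only if it carries at least one citation tag and
    every tag it carries points at a known source.
    """
    cleared, review = [], []
    for s in statements:
        tags = CITATION.findall(s)
        if tags and all(t in known_sources for t in tags):
            cleared.append(s)
        else:
            review.append(s)  # uncited or cites an unknown source
    return cleared, review
```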

Referencing systems trace every claim back to its origin document. Medical-grade AI tracks which page, section, and version generated each training statement, creating the audit trail compliance teams need during inspections.
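
One way to model this trail is a small record per reference. The `SourceRef` and `TrainingStatement` schemas below are hypothetical, chosen to mirror the page, section, and version fields described above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SourceRef:
    """Where a statement came from (hypothetical schema)."""
    document: str
    version: str
    section: str
    page: int


@dataclass
class TrainingStatement:
    text: str
    refs: list[SourceRef] = field(default_factory=list)


def audit_trail(statements: list[TrainingStatement]) -> list[str]:
    """Render one line per (statement, source) pair; unsourced statements are marked."""
    lines = []
    for st in statements:
        if not st.refs:
            lines.append(f"UNSOURCED | {st.text}")
        for r in st.refs:
            lines.append(f"{r.document} v{r.version} sec.{r.section} p.{r.page} | {st.text}")
    return lines
```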

Referencing Agent for Medical-Grade Accuracy

A Referencing Agent can verify every training statement against your source documents before deployment. Because a single error can carry regulatory consequences, this verification step is designed to catch content that lacks clear source attribution, reducing the risk that ungrounded content reaches learners.

Upload your clinical protocols, SOPs, or compliance materials. The system converts up to 5,000 pages into interactive courses while cross-checking each generated claim against your original documents. Content lacking clear source attribution gets flagged automatically.
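
A cross-check of this kind can be approximated by measuring how much of each claim's wording appears in the passage it cites. This is a sketch under stated assumptions: the stopword list, the 0.8 threshold, and the claim-to-source mapping are hypothetical, and lexical overlap can miss negation or a single altered value in otherwise matching text, so real systems typically add an entailment model or human review.

```python
import re

# Hypothetical, deliberately small stopword list.
STOPWORDS = {"a", "an", "the", "is", "are", "be", "to", "of", "and", "or",
             "in", "on", "at", "for", "with", "must", "should"}


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def support_score(claim: str, passage: str) -> float:
    """Fraction of the claim's content words that also appear in the passage."""
    words = content_words(claim)
    if not words:
        return 0.0
    return len(words & content_words(passage)) / len(words)


def flag_unsupported(
    claims: dict[str, str], sources: dict[str, str], threshold: float = 0.8
) -> list[str]:
    """Return claims whose cited passage does not lexically support them.

    `claims` maps claim text -> cited source id (hypothetical format).
    A missing source id is treated as an empty passage, so it is flagged.
    """
    flagged = []
    for claim, source_id in claims.items():
        passage = sources.get(source_id, "")
        if support_score(claim, passage) < threshold:
            flagged.append(claim)
    return flagged
```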

The medical referencing layer tracks which section, page, and version of your source material generated each training statement. During FDA inspections or internal audits, you can trace every piece of training content back to its approved source.

The engine restricts generation to your verified documents only. If information doesn't exist in your uploaded materials, the system won't fabricate an answer.
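
The abstain-by-default behavior can be expressed as a gate in front of the model call. In this sketch, `generate` stands in for whatever model API a platform uses (a hypothetical parameter), and `NOT_FOUND` is an illustrative message. The gate ensures the model is never asked to answer without retrieved passages; it does not by itself stop a model from adding unsupported detail, which is what the referencing checks are for.

```python
from typing import Callable

# Illustrative fallback text returned instead of a generated answer.
NOT_FOUND = "This topic is not covered in the approved source documents."


def answer_from_sources(
    question: str,
    retrieved: list[str],
    generate: Callable[[str, list[str]], str],
) -> str:
    """Call the generator only when retrieval found grounding passages.

    `generate` is a placeholder for a model call; it is never invoked with an
    empty context, so the model is never asked to answer without grounding.
    """
    if not retrieved:
        return NOT_FOUND
    return generate(question, retrieved)
```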

Multi-Format Content Verification Features

A Creator Agent generates courses, communications, and surveys by pulling from your uploaded files and HRIS data. This input-first approach reduces hallucination risk because the AI relies more on information that exists in your source materials than on generating new facts from its general training.

When you request content for different cohorts, the system customizes based on role data and performance metrics from your HRIS. Each variation stays anchored to your verified documents. A sales rep and a clinical specialist receive different examples, but both versions trace back to approved sources.

Translation into 30+ languages maintains source alignment. The system translates your verified content instead of regenerating explanations in each language, preserving factual accuracy across linguistic versions.

Assessments are built from your source documentation. Quiz questions pull from specific sections of your materials, and correct answers are verified against those same passages.
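
A basic version of that answer check might look like this. `QuizItem` is a hypothetical schema, and plain substring matching is a crude assumption (for example, "5 days" would match inside "15 days"); it is meant only to show the two checks: the keyed answer appears in the source, and no distractor does.

```python
from dataclasses import dataclass


@dataclass
class QuizItem:
    question: str
    choices: list[str]
    answer: str       # the choice marked correct
    source_text: str  # the passage the item was built from


def verify_item(item: QuizItem) -> list[str]:
    """Return a list of problems; an empty list means the item passes."""
    problems = []
    source = item.source_text.lower()
    if item.answer not in item.choices:
        problems.append("answer is not one of the choices")
    # The keyed answer should be stated in the source passage itself.
    if item.answer.lower() not in source:
        problems.append("answer not found in source passage")
    # A distractor that also appears in the passage may be a defensible answer.
    for c in item.choices:
        if c != item.answer and c.lower() in source:
            problems.append(f"distractor appears in source: {c!r}")
    return problems
```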

Real-Time Knowledge Gap Analytics for Compliance Tracking

Knowledge Gap Analytics track comprehension at four levels: individual learner, lesson module, specific question, and entire cohort. When a critical concept shows low mastery rates, you can spot the gap immediately.
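
Rolling responses up at each of the four levels is a simple group-by. The `Response` record and its field names are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

LEVELS = {"learner", "module", "question", "cohort"}


@dataclass(frozen=True)
class Response:
    learner: str
    cohort: str
    module: str
    question: str
    correct: bool


def mastery_by(responses: list[Response], level: str) -> dict[str, float]:
    """Share of correct answers grouped by 'learner', 'module', 'question', or 'cohort'."""
    if level not in LEVELS:
        raise ValueError(f"unknown level: {level}")
    # key -> [correct_count, attempt_count]
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for r in responses:
        key = getattr(r, level)
        totals[key][0] += int(r.correct)
        totals[key][1] += 1
    return {key: c / n for key, (c, n) in totals.items()}
```

With one response per learner per question, a question missed by 40% of learners shows up here with a mastery of 0.6.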

Real-time tracking shows which surgical procedures, drug interaction guidelines, or safety protocols need reinforcement before someone applies that knowledge in the field.

Per-question analytics reveal where training content might lack clarity. If 40% of learners miss the same compliance question, you can investigate whether the source material needs clarification or the assessment requires revision.

The system flags learners who show knowledge gaps in high-stakes areas. You can trigger refresher training or additional verification before they work independently, reducing the risk of protocol deviations that could later surface in regulatory inspections.
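
The flagging rule can be sketched as follows, assuming per-learner, per-topic mastery scores are already computed. The 0.8 threshold, the input shape, and the topic names are hypothetical; an organization would set its own.

```python
def learners_needing_refresher(
    scores: dict[tuple[str, str], float],
    high_stakes: set[str],
    threshold: float = 0.8,
) -> dict[str, list[str]]:
    """Map each learner to the high-stakes topics where mastery is below threshold.

    `scores` maps (learner, topic) -> mastery between 0 and 1 (hypothetical input).
    Low scores on topics outside `high_stakes` are ignored.
    """
    flagged: dict[str, list[str]] = {}
    # Sorting keeps the output order deterministic.
    for (learner, topic), mastery in sorted(scores.items()):
        if topic in high_stakes and mastery < threshold:
            flagged.setdefault(learner, []).append(topic)
    return flagged
```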

Completion Rates: Why Accuracy Drives Engagement

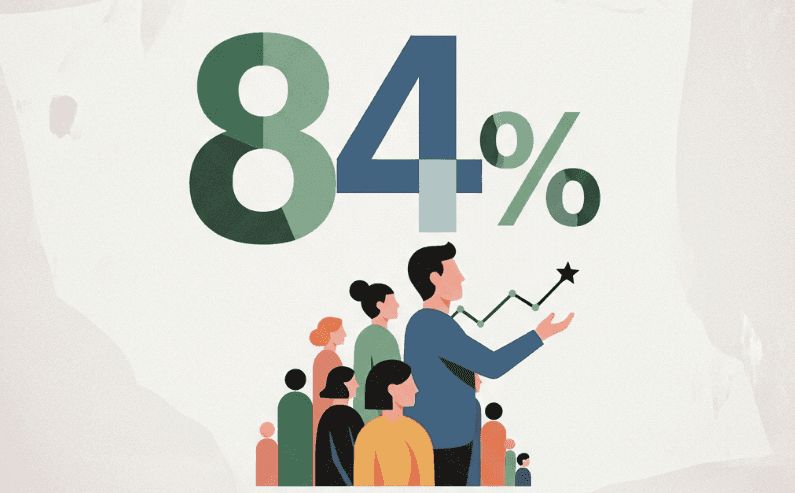

Across more than 170,000 enrollments, learners in life sciences and healthcare complete training at an 84% rate, and 8 in 10 report positive sentiment. People trust training that won't mislead them.

When clinicians and compliance teams encounter AI-generated content, skepticism runs high. They've seen fabricated citations and plausible-sounding errors in other tools. Hallucination-proof content removes much of that doubt because learners recognize the material matches their approved protocols and clinical guidelines exactly.

Verified accuracy reduces the friction between receiving training and acting on it. In high-stakes roles, learners won't apply knowledge they question. When they trust the source, completion follows naturally.

Spaced Learning for Long-Term Retention in High-Stakes Roles

Spaced learning distributes training over weeks or months instead of in single sessions. This approach can substantially improve long-term retention compared with delivering the same material in one sitting.
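
An expanding-interval schedule is one common way to space sessions. The gaps below (1, 3, 7, 14, and 30 days) and the function name are illustrative assumptions, not a schedule the article prescribes.

```python
from datetime import date, timedelta

# Expanding gaps in days between sessions; an illustrative choice, not a standard.
DEFAULT_GAPS = (1, 3, 7, 14, 30)


def spaced_schedule(start: date, gaps: tuple[int, ...] = DEFAULT_GAPS) -> list[date]:
    """Return the first session date followed by one review date per gap."""
    sessions = [start]
    for gap in gaps:
        # Each review is scheduled relative to the previous session.
        sessions.append(sessions[-1] + timedelta(days=gap))
    return sessions
```

Starting on January 5, 2026, this yields sessions on January 5, 6, 9, 16, and 30, with a final review on March 1.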

Healthcare and pharmaceutical teams must recall protocols without reference materials during patient care. Surgical nurses need instant recall of sterile techniques. Pharmaceutical representatives must remember drug contraindications during physician meetings.

Compliance audits can happen with little or no warning. When regulators ask about cold chain protocols or adverse event reporting, teams need accurate recall of training from months earlier.

Cramming falls short because long-term memory depends on repeated exposure over time. Spacing lessons across weeks builds recall that holds up in high-stakes situations.

Personal Device Compliance without Compromising Security

Frontline workers in healthcare and life sciences rarely have corporate devices but still need compliance training. BYOD approaches create security risks when training involves PHI or proprietary drug information.

Personal device compliance features automatically encrypt phone numbers when learners enroll via SMS. Training reaches employees on their phones without exposing personal contact information in corporate systems.

Toll-free delivery sends training messages from dedicated secure numbers rather than internal systems, reducing the risk of data leakage. Many AI compliance frameworks and privacy best practices for controlled industries recommend separating personal identifiers from training data, and this architecture supports that separation.
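
The separation described here can be sketched as a vault that holds phone numbers while training records see only a pseudonymous ID. Note the swapped-in technique: this uses keyed hashing (HMAC-SHA256) for pseudonymization, not the encryption the article describes, and the `ContactVault` class is hypothetical. A real deployment would also encrypt the vault at rest.

```python
import hashlib
import hmac
import secrets
from typing import Optional


class ContactVault:
    """Keeps phone numbers apart from training records (hypothetical design).

    Training data only ever sees a pseudonymous learner ID derived with a
    keyed HMAC. This is pseudonymization, not encryption.
    """

    def __init__(self, key: Optional[bytes] = None) -> None:
        self._key = key or secrets.token_bytes(32)
        self._numbers: dict[str, str] = {}  # pseudonym -> normalized phone number

    def enroll(self, phone: str) -> str:
        # Normalize so "+1 (555) 010-2345" and "15550102345" map to one learner.
        digits = "".join(ch for ch in phone if ch.isdigit())
        pseudonym = hmac.new(self._key, digits.encode(), hashlib.sha256).hexdigest()[:16]
        self._numbers[pseudonym] = digits
        return pseudonym

    def number_for(self, pseudonym: str) -> str:
        """Intended only for the delivery service that sends the SMS."""
        return self._numbers[pseudonym]
```

A training record can then store `{"learner": pseudonym, "lesson": ..., "score": ...}` with no phone number in it.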

FLSA-compliant shift locking prevents training from deploying during off-hours when hourly workers shouldn't be working. The system schedules lessons within approved shift times, helping avoid wage-and-hour violations while maintaining training cadence.
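
The scheduling rule can be sketched as a function that passes through in-shift send times and defers the rest to the next shift start. It assumes a single same-day shift in naive local time; the function name is hypothetical, and overnight shifts, days off, and time zones are left out. It illustrates the scheduling logic only and is not a statement of what the FLSA requires.

```python
from datetime import datetime, time, timedelta


def next_send_time(proposed: datetime, shift_start: time, shift_end: time) -> datetime:
    """Return `proposed` if it falls inside the shift, else the next shift start.

    Assumes a same-day shift (shift_start < shift_end) and naive local times.
    """
    if not shift_start < shift_end:
        raise ValueError("this sketch only handles same-day shifts")
    t = proposed.time()
    if shift_start <= t < shift_end:
        return proposed
    # Before the shift: hold until today's start. After it: hold until tomorrow's.
    day = proposed.date() if t < shift_start else proposed.date() + timedelta(days=1)
    return datetime.combine(day, shift_start)
```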

Why Enterprises Choose Arist

Arist is the leading AI training software built for accuracy, speed, and scale. Its hallucination-proof AI securely turns 5,000+ pages of SOPs, clinical protocols, and guidelines into verified courses, comms, and assessments, all in one click. Every statement is checked by the Referencing Agent, which ties content back to specific pages and versions of your documents for audit-ready traceability.

Used by Fortune 500 frontline teams, sales teams, HR/L&D, and managers, Arist delivers continuous enablement through MS Teams, SMS, and Slack, driving 95%+ adoption within minutes and 84% completion rates across 170,000+ enrollments.

Key advantages include:

Hallucination-Proof AI that only generates content grounded in your approved documents

AI Course Creator for instant, high-accuracy content conversion

Medical-grade Referencing for healthcare and life sciences teams

Spaced learning + message-based microlearning for long-term retention

Knowledge Gap Analytics to identify compliance and skill risks in real time

LMS/HRIS integrations + automated triggers for just-in-time training

Personal device compliance with encrypted delivery and shift locking

FAQs

What is hallucination-proof AI and how does it work in training platforms?

Hallucination-proof AI steers content generation toward your verified source documents, dramatically reducing the risk of invented information. It uses source grounding, retrieval-augmented generation, and referencing systems that trace every training statement back to specific pages in your uploaded materials.

How can I verify that AI-generated training content meets FDA audit requirements?

Look for platforms with medical referencing capabilities that track which section, page, and version of your source material generated each training statement. This creates the audit trail regulators need during inspections, allowing you to trace every piece of content back to approved sources.

When should controlled industries consider switching to hallucination-proof training platforms?

Consider switching if your current training tools generate content without clear source attribution, or if you face upcoming regulatory audits where training accuracy will be assessed. Stanford research found that even advanced AI models made unsupported clinical assertions in nearly one-third of medical queries.

Final thoughts on AI accuracy in healthcare and life sciences training

Accuracy is non-negotiable in healthcare and life sciences training, and a hallucination-proof AI solution like Arist is designed to keep training content tightly aligned with approved clinical guidelines and SOPs. By drawing from your uploaded materials and flagging missing attribution, Arist reduces the risks that come from AI guessing its way through medical content. Your audit trail stays intact, your teams learn from verified information, and you avoid the gaps that lead to compliance issues.

Bring real impact to your people

We care about solving meaningful problems and being thought partners first and foremost. Arist is used and loved by the Fortune 500 — and we'd love to support your goals.

Curious to get a demo or free trial? We'd love to chat:
